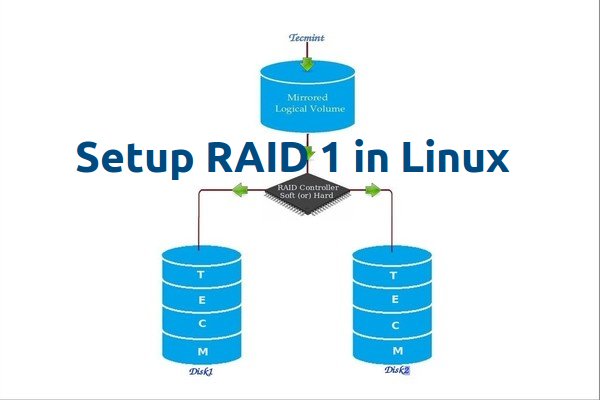

RAID mirroring means writing an exact clone (or mirror) of the same data to two drives. At least two disks are required in an array to create RAID1, and it is useful only when read performance or reliability matters more than data storage capacity.

Mirrors are created to protect against data loss due to disk failure. Each disk in a mirror contains an exact copy of the data. When one disk fails, the same data can be retrieved from the other, functioning disk. The failed drive can then be replaced, and on hardware that supports hot-swapping this can be done on the running computer without interrupting users.
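The capacity trade-off described above can be sketched with a small shell function. It assumes the usual RAID 1 behaviour that every member stores a full copy, so the usable space equals the smallest disk and an array of n disks keeps working until n-1 of them have failed. The function name raid1_summary is hypothetical and not part of mdadm.

```shell
#!/bin/sh
# Sketch: usable capacity and failure tolerance of a RAID 1 mirror.
# raid1_summary is a hypothetical helper, not part of mdadm.
# Arguments: the size of each member disk, all in the same unit (e.g. GB).
raid1_summary() {
    if [ "$#" -lt 2 ]; then
        echo "RAID 1 needs at least two disks" >&2
        return 1
    fi
    min=""
    for size in "$@"; do
        # Every disk holds a full copy, so the smallest disk limits the array.
        if [ -z "$min" ] || [ "$size" -lt "$min" ]; then
            min=$size
        fi
    done
    # The array survives as long as one member is still working.
    echo "usable=$min tolerated_failures=$(( $# - 1 ))"
}

# Usage: the two 1 TB disks used in this article.
# raid1_summary 1000 1000    # prints: usable=1000 tolerated_failures=1
```

With the two 1 TB disks from the setup below, only 1 TB is usable: half of the raw space is spent on the copy.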

My Server Setup

Operating System : Ubuntu 12.04 LTS

IP Address : 192.168.0.195

Hostname : dynamicsvn

Disk 1 [1 TB] : /dev/sdb

Disk 2 [1 TB] : /dev/sdc

Step 1: Installing Prerequisites and Examining Drives

1. As I said above, we’re using the mdadm utility for creating and managing RAID in Linux. So, let’s install the mdadm software package using the yum or apt-get package manager.

# yum install mdadm [on RedHat systems]

# apt-get install mdadm [on Debian systems]
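If the same instructions have to work on both families of systems, the choice between the two commands can be scripted. This is a sketch under the assumption that the distribution is identified by the ID field of /etc/os-release (ubuntu, debian, rhel, centos) on systems that provide that file; mdadm_install_cmd is a hypothetical helper, not part of any package.

```shell
#!/bin/sh
# Sketch: pick the mdadm install command from the distribution ID.
# mdadm_install_cmd is a hypothetical helper; the ID-to-tool mapping
# below is an assumption covering only the two families named above.
mdadm_install_cmd() {
    # Default to the ID= field of /etc/os-release when no argument is given.
    id=${1:-$(. /etc/os-release 2>/dev/null && echo "$ID")}
    case $id in
        ubuntu|debian) echo "apt-get install mdadm" ;;
        rhel|centos)   echo "yum install mdadm" ;;
        *)
            echo "unsupported distribution: ${id:-unknown}" >&2
            return 1
            ;;
    esac
}

# Usage (as root): run the command that matches this machine.
# cmd=$(mdadm_install_cmd) && $cmd
```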

2. Once the “mdadm” package has been installed, examine the disk drives with the following command to check whether any RAID is already configured on them.

# mdadm -E /dev/sd[b-c]

mdadm : No md superblock detected on /dev/sdb

mdadm : No md superblock detected on /dev/sdc
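When several disks are being checked, the output of the command above can be filtered down to the disks that are still free for a new array. The sketch below only parses text; it assumes mdadm reports unused disks with the "No md superblock detected on ..." message shown above, and the helper name blank_md_devices is hypothetical.

```shell
#!/bin/sh
# Sketch: turn `mdadm -E` output into a list of disks that carry no md
# superblock, i.e. disks that are not already part of a RAID array.
# blank_md_devices is a hypothetical helper; it only parses text.
blank_md_devices() {
    # Match lines such as "mdadm: No md superblock detected on /dev/sdb."
    # and print just the device path (without any trailing period).
    sed -n 's/.*No md superblock detected on \([^ .]*\).*/\1/p'
}

# Usage (as root). mdadm may print this message on stderr, so merge it:
# mdadm -E /dev/sd[b-c] 2>&1 | blank_md_devices
```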

Step 2: Drive Partitioning for RAID

3. As mentioned above, we’re using a minimum of two drives, /dev/sdb and /dev/sdc, for creating RAID1. Let’s create a partition on each of these two drives using the ‘fdisk‘ command and change the partition type to raid during creation.

# fdisk /dev/sdb

Follow the instructions below:

- Press ‘n‘ to create a new partition.

- Then choose ‘p‘ for a primary partition.

- Next, select 1 as the partition number.

- Accept the default full size by pressing Enter twice (once for the first sector and once for the last).

- Next, press ‘p‘ to print the defined partition.

- Type ‘t‘ to change the partition type.

- Press ‘L‘ to list all available types.

- Enter ‘fd‘ for Linux raid autodetect and press Enter to apply.

- Then use ‘p‘ again to print the changes we have made.

- Use ‘w‘ to write the changes.

After the partition on ‘/dev/sdb‘ has been created, follow the same instructions to create a new partition on the /dev/sdc drive.

# fdisk /dev/sdc
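The interactive fdisk session can also be scripted, which helps when the same layout is needed on several disks. This sketch swaps in sfdisk (also part of util-linux) instead of fdisk, and assumes blank disks with an MBR (DOS) partition table, where type fd means Linux raid autodetect. The helper names are hypothetical, and the second function overwrites the partition table of every disk it is given.

```shell
#!/bin/sh
# Sketch: non-interactive version of the fdisk steps, using sfdisk.
# Assumes blank disks and an MBR/DOS label, where type "fd" is valid.
# raid_sfdisk_input and partition_for_raid are hypothetical helpers.

# sfdisk input: a DOS label, then one partition with default start,
# default size (the whole disk) and type fd (Linux raid autodetect).
raid_sfdisk_input() {
    printf 'label: dos\n,,fd\n'
}

# WARNING: destructive. Writes a new partition table to every disk given.
partition_for_raid() {
    for dev in "$@"; do
        raid_sfdisk_input | sfdisk "$dev" || return 1
    done
}

# Usage (as root):
# partition_for_raid /dev/sdb /dev/sdc
```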

4. Once both partitions have been created successfully, verify the changes on both the sdb and sdc drives using the same ‘mdadm‘ command, and confirm the RAID type as shown in the following screen grabs.

# mdadm -E /dev/sd[b-c]

Step 3: Creating RAID1 Devices

5. Next, create a RAID1 device called ‘/dev/md0‘ using the following command, and verify it.

# mdadm --create /dev/md0 --level=mirror --raid-devices=2 /dev/sd[b-c]1

# cat /proc/mdstat
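Right after creation, /proc/mdstat shows the mirror performing its initial resync, during which the two members are brought into sync. A small sketch, assuming the usual "resync = 0.5%" (or "recovery = ...") progress line in /proc/mdstat, can extract that percentage so a script can wait for the sync to finish; md_sync_percent is a hypothetical name.

```shell
#!/bin/sh
# Sketch: read the resync (or recovery) progress of md arrays from
# /proc/mdstat-style text. md_sync_percent is a hypothetical helper;
# it prints one percentage per syncing array and nothing once done.
md_sync_percent() {
    sed -nE 's/.*(resync|recovery) *= *([0-9.]+)%.*/\2/p'
}

# Usage: poll until the mirror has finished its first sync.
# while [ -n "$(md_sync_percent < /proc/mdstat)" ]; do
#     echo "md0 sync: $(md_sync_percent < /proc/mdstat)%"
#     sleep 10
# done
```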

6. Next, check the RAID device type and the RAID array using the following commands.

# mdadm -E /dev/sd[b-c]1

# mdadm --detail /dev/md0
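The fields printed by mdadm --detail follow a "Key : value" layout, so individual values such as State or Active Devices can be checked from a script instead of by eye. This is a text-parsing sketch that assumes that layout; md_detail_field is a hypothetical helper.

```shell
#!/bin/sh
# Sketch: pull a single "Key : value" field out of `mdadm --detail`
# output, e.g. State or Active Devices. md_detail_field is a
# hypothetical helper that only parses text.
md_detail_field() {
    awk -v want="$1" '
        {
            i = index($0, " : ")        # keys and values are split by " : "
            if (i == 0) next
            key = substr($0, 1, i - 1)
            sub(/^[ \t]+/, "", key)     # drop the alignment padding
            if (key == want) {
                val = substr($0, i + 3)
                sub(/[ \t]+$/, "", val) # drop trailing blanks
                print val
                exit
            }
        }'
}

# Usage (as root):
# mdadm --detail /dev/md0 | md_detail_field "State"
# mdadm --detail /dev/md0 | md_detail_field "Active Devices"
```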

Step 4: Creating File System on RAID Device

7. Create an ext4 file system on md0 and mount it under /mnt/codebase.

# mkfs.ext4 /dev/md0

8. Next, mount the newly created filesystem under ‘/mnt/codebase‘, create some files, and verify the contents under the mount point.

# mkdir /mnt/codebase

# mount /dev/md0 /mnt/codebase/

9. To auto-mount RAID1 on system reboot, you need to add an entry to the fstab file. Open the ‘/etc/fstab‘ file and add the following line at the bottom.

/dev/md0 /mnt/codebase ext4 defaults 0 0
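Two refinements of the fstab step can be sketched in shell: appending the entry only if it is not already there, so repeating the step does not duplicate it, and optionally referring to the filesystem by UUID, which stays the same even if the array comes up under a different md device name (such as /dev/md127). The helper names below are hypothetical; blkid -s UUID -o value is the util-linux way to print a filesystem's UUID.

```shell
#!/bin/sh
# Sketch: idempotent fstab entry for the RAID1 filesystem.
# fstab_line and add_fstab_entry are hypothetical helpers.

# Build an entry with the same mount point and options as above.
# $1 is the device spec: /dev/md0 or UUID=<uuid>.
fstab_line() {
    printf '%s /mnt/codebase ext4 defaults 0 0\n' "$1"
}

# Append LINE to FILE unless an identical line is already present.
add_fstab_entry() {
    line=$1
    file=$2
    if ! grep -qxF -- "$line" "$file" 2>/dev/null; then
        printf '%s\n' "$line" >> "$file"
    fi
}

# Usage (as root):
# uuid=$(blkid -s UUID -o value /dev/md0)
# add_fstab_entry "$(fstab_line "UUID=$uuid")" /etc/fstab
# mount -a
```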

10. Run ‘mount -a‘ to check whether there are any errors in the fstab entry.

11. Next, manually save the RAID configuration to the ‘mdadm.conf‘ file using the command below.

# mdadm --detail --scan --verbose >> /etc/mdadm/mdadm.conf

The system reads this configuration file at boot and uses it to assemble the RAID devices.
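Because the command above appends with >>, running it twice leaves duplicate ARRAY entries in mdadm.conf. The sketch below appends an ARRAY line only when its UUID is not yet present; it assumes the standard "ARRAY ... UUID=..." format printed by mdadm --detail --scan, and merge_array_lines is a hypothetical helper. On Ubuntu/Debian, rebuilding the initramfs afterwards lets early boot see the same configuration.

```shell
#!/bin/sh
# Sketch: append ARRAY lines from `mdadm --detail --scan` to mdadm.conf
# only when that array's UUID is not listed yet, so re-running the step
# does not create duplicates. merge_array_lines is hypothetical.
merge_array_lines() {
    conf=$1
    while IFS= read -r line; do
        case $line in
            ARRAY*) ;;              # keep only ARRAY lines
            *) continue ;;
        esac
        uuid=$(printf '%s\n' "$line" | sed -n 's/.*UUID=\([0-9a-fA-F:]*\).*/\1/p')
        [ -n "$uuid" ] || continue
        if ! grep -qs "UUID=$uuid" "$conf"; then
            printf '%s\n' "$line" >> "$conf"
        fi
    done
}

# Usage (as root on Ubuntu/Debian):
# mdadm --detail --scan | merge_array_lines /etc/mdadm/mdadm.conf
# update-initramfs -u
```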

Step 5: Verify Data After Disk Failure

12. Our main goal is that our data remains available even after one of the hard disks fails or crashes. Let’s see what happens when one of the disks in the array becomes unavailable.

# mdadm --detail /dev/md0

root@dynamicsvn:~# mdadm --detail /dev/md0

/dev/md0:

Version : 1.2

Creation Time : Sat Sep 26 10:53:22 2015

Raid Level : raid1

Array Size : 976760400 (931.51 GiB 1000.20 GB)

Used Dev Size : 976760400 (931.51 GiB 1000.20 GB)

Raid Devices : 2

Total Devices : 2

Persistence : Superblock is persistent

Update Time : Tue Sep 29 11:42:53 2015

State : clean

Active Devices : 2

Working Devices : 2

Failed Devices : 0

Spare Devices : 0

Name : dynamicsvn:0 (local to host dynamicsvn)

UUID : d2087ef6:c4a7b644:40ec2320:a5aca46e

Events : 39

Number Major Minor RaidDevice State

0 8 17 0 active sync /dev/sdb1

1 8 33 1 active sync /dev/sdc1

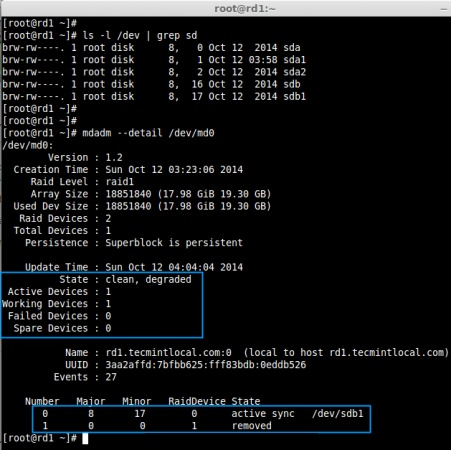

In the above image, we can see that there are 2 devices in our RAID and that Active Devices is 2. Now let’s see what happens when a disk is unplugged (here, the sdc disk is removed) or fails.

# ls -l /dev | grep sd

# mdadm --detail /dev/md0
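Besides reading the Active Devices count, a degraded mirror can be spotted in /proc/mdstat, where the status [2/2] [UU] of a healthy two-disk array becomes [2/1] [U_] once a member is missing. The sketch assumes that [expected/working] notation; md_is_degraded is a hypothetical helper that could be called from a cron job or a monitoring script.

```shell
#!/bin/sh
# Sketch: detect a degraded array from /proc/mdstat-style text.
# "[2/1]" means 2 members are expected but only 1 is working.
# md_is_degraded is a hypothetical helper: exit status 0 if any array
# in the input is degraded, 1 otherwise.
md_is_degraded() {
    awk '
        match($0, /\[[0-9]+\/[0-9]+\]/) {
            split(substr($0, RSTART + 1, RLENGTH - 2), n, "/")
            if (n[2] + 0 < n[1] + 0) degraded = 1
        }
        END { if (degraded) exit 0; exit 1 }'
}

# Usage:
# if md_is_degraded < /proc/mdstat; then echo "md0 is degraded"; fi
```

To rehearse this without unplugging a disk, mdadm can mark a member as failed and remove it in software, for example ‘mdadm /dev/md0 --fail /dev/sdc1‘ followed by ‘mdadm /dev/md0 --remove /dev/sdc1‘; ‘mdadm /dev/md0 --add /dev/sdc1‘ puts it back and starts a rebuild.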

In the above image, you can see that one of our drives is lost: I unplugged one of the drives from my virtual machine. Now let’s check our precious data.

# cd /mnt/codebase/

# ls -la dyna_repo

Did you see? Our data is still available. This shows the advantage of RAID 1 (mirroring).
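Listing the directory shows that the files are still there; comparing checksums taken before and after the disk is removed also shows that their contents are unchanged. The sketch below assumes GNU coreutils sha256sum and find, and the helper names and the manifest path in the usage comment are hypothetical.

```shell
#!/bin/sh
# Sketch: record checksums of the files on the mirror before the failure
# test, then confirm they are unchanged afterwards. Uses GNU coreutils
# sha256sum; snapshot_checksums and verify_checksums are hypothetical.

# Write a manifest of every file under DIR ($1) to MANIFEST ($2).
# Keep MANIFEST outside DIR so it does not list itself.
snapshot_checksums() {
    (cd "$1" && find . -type f -exec sha256sum {} +) > "$2"
}

# Exit status 0 only if every file in MANIFEST still matches.
verify_checksums() {
    case $2 in
        /*) manifest=$2 ;;
        *)  manifest=$PWD/$2 ;;     # resolve before changing directory
    esac
    (cd "$1" && sha256sum -c --quiet "$manifest")
}

# Usage (as root):
# snapshot_checksums /mnt/codebase /root/codebase.sha256   # before unplugging
# verify_checksums /mnt/codebase /root/codebase.sha256     # after
```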